My Professional Game Projects

Putting together a portfolio as an engineer is tricky. The screenshots you see below show the final product, but they don't reveal the architecture that makes it all work.

A beautiful game can run on spaghetti code, and a simple-looking project can be powered by incredibly elegant systems.

So, here's my attempt to show you both: the projects I've helped build, and the technical thinking that went into them.

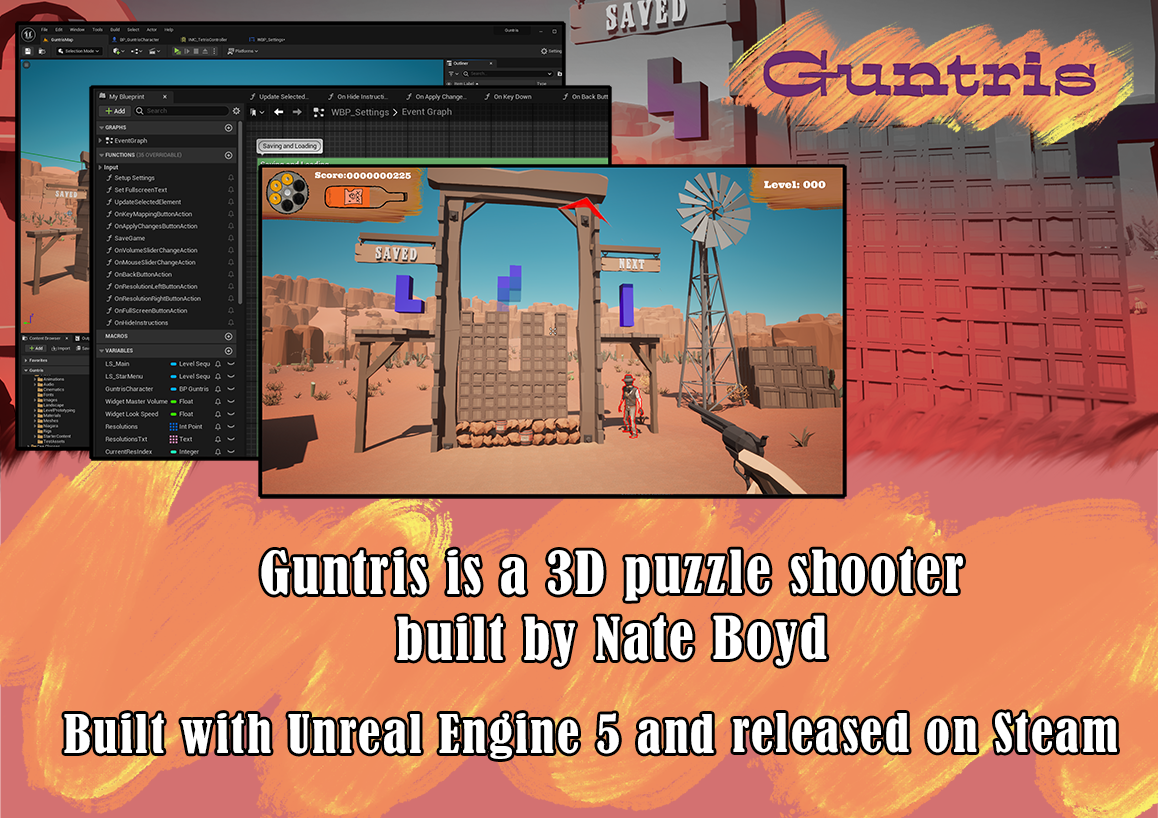

Guntris

I asked the important question that nobody was asking:

What if Tetris had a gun?

Key Features

- A unique puzzle FPS that requires the player to both play Tetris and shoot baddies.

- A clean low-poly look, with the majority of assets custom-made by me and a few kit assets mixed in.

- Well-polished accessibility features, including a remappable control scheme and difficulty adjustment options.

Project Overview

Guntris, a portmanteau of Gun and Tetris, was my first independently produced game as a solo developer and was released on Steam on September 6, 2024.

I developed it over six months, partly as a way to get comfortable with Unreal Engine 5 after more than five years of developing in Unity.

The game features what you would expect from modern Tetris. Block saving, bag randomization, T-Spins: it's all there. What makes this game unique is the way I melded it with a first-person shooter to create a puzzle-shooter hybrid.
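Bag randomization usually refers to the modern-Tetris approach of dealing pieces from shuffled "bags" that each contain all seven tetrominoes exactly once, which prevents long droughts of any single piece. Below is a minimal Python sketch of that idea. It is illustrative only, not the Guntris code (which was built in Unreal Engine 5), and the `BagRandomizer` name is hypothetical.

```python
import random

# The seven standard tetrominoes.
PIECES = ["I", "O", "T", "S", "Z", "J", "L"]


class BagRandomizer:
    """Deals pieces from shuffled bags that each hold all seven tetrominoes."""

    def __init__(self, seed=None):
        self._rng = random.Random(seed)
        self._bag = []

    def next_piece(self):
        # Refill and reshuffle only when the current bag is empty, so each
        # group of seven draws (starting from a fresh bag) contains every piece once.
        if not self._bag:
            self._bag = PIECES[:]
            self._rng.shuffle(self._bag)
        return self._bag.pop()


randomizer = BagRandomizer(seed=42)
first_bag = [randomizer.next_piece() for _ in range(7)]
print(sorted(first_bag))  # ['I', 'J', 'L', 'O', 'S', 'T', 'Z']
```

The order within each bag is random, but a player is never more than twelve pieces away from any given tetromino, which keeps the board fair while still feeling unpredictable.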

The FPS mechanics work on two fronts: a traditional Shooting Gallery where you shoot bad guys, and shooting out Tetris blocks to modify the game board.

The Shooting Gallery features enemy bandits spawning a full 360 degrees around the player. These bandits throw explosives that add a row of junk to the Tetris board. The player's goal is to shoot both the bandit and any explosives in the air.

Once that round of bandits is dealt with, the player returns to the still-running Tetris board and keeps playing. With the gun, the player can shoot out pesky Tetris blocks that are preventing combos, or clear away junk blocks by shooting pulsating TNT barrels.

There are several points of friction that add to the fun factor here:

- Ammo Management: Like a real revolver, the gun holds a maximum of six bullets. Bullets are refilled by clearing lines of Tetris, and a full Tetris (four lines at once) refills ammo completely as a fun bonus.

- Whisky Meter: Clearing lines and shooting bandits fills a whisky bottle in the HUD. When used, the whisky slows down time and gives you infinite ammo to deal with an excess of bandits or Tetris blocks.

- Increasing Spawn Rate: As in other Tetris games, the more lines you clear, the faster the blocks drop. In Guntris, this also increases the enemy spawn rate AND makes enemies more likely to spawn in harder-to-reach locations.
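The ammo rules above can be modeled as a small piece of state. This is a hypothetical Python illustration, not the shipped UE5 code: the `Revolver` class is invented for the example, and refilling one bullet per cleared line is an assumption, since the text only says that line clears refill ammo and that a full Tetris refills it completely.

```python
MAX_AMMO = 6  # a revolver cylinder holds six rounds


class Revolver:
    def __init__(self):
        self.ammo = MAX_AMMO
        self.infinite_ammo = False  # set while the whisky meter is active

    def try_fire(self):
        """Return True if a shot was fired, False if the cylinder is empty."""
        if self.infinite_ammo:
            return True  # whisky active: firing does not consume ammo
        if self.ammo == 0:
            return False
        self.ammo -= 1
        return True

    def on_lines_cleared(self, count):
        if count >= 4:
            # A full Tetris (four lines at once) refills the cylinder completely.
            self.ammo = MAX_AMMO
        else:
            # Assumption: one bullet per cleared line, capped at MAX_AMMO.
            self.ammo = min(MAX_AMMO, self.ammo + count)


gun = Revolver()
for _ in range(MAX_AMMO):
    gun.try_fire()
print(gun.try_fire())  # False: the cylinder is empty
gun.on_lines_cleared(2)
print(gun.ammo)  # 2
gun.on_lines_cleared(4)
print(gun.ammo)  # 6
```

Tying ammo to line clears is what forces the player back to the board: running dry in the arena can only be fixed by playing Tetris well.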

The game was released on Steam to moderate success.

Technical Highlights

- Architected the dual-core gameplay loop on a component-based Unreal Engine 5 framework. The Tetris simulation runs in a dedicated TetrisManager actor that synchronizes its state with the FPS arena through a decoupled event system, using delegates and interface calls to trigger enemy spawns and environmental events in real time.

- Developed a unique Tetris FPS interaction system where the player must manage the state of the playfield while defeating enemies in a 3D arena, creating a strategic link between the two gameplay pillars.

- Engineered a highly flexible input management system supporting fully rebindable controls for both the FPS and puzzle genres simultaneously, resolving input context conflicts and providing a seamless user experience.

- Implemented a dynamic time dilation mechanic that strategically slows the Tetris descent and enemy projectile speed, giving the player critical breathing room while maintaining full interactivity for tactical decision-making.

- Integrated with the Steam leaderboard system by designing a data schema to capture and validate complex multi-genre scores, combining Tetris line clears and FPS combat efficiency into a single competitive metric.
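The decoupled event system described above follows the observer pattern: the board broadcasts what happened, and listeners decide how to react. In Unreal this is typically done with multicast delegates. The Python sketch below only mimics that shape with a hypothetical `MulticastDelegate` class, and the `EnemySpawner` spawn-interval curve is an invented example of how line clears could raise the spawn rate.

```python
class MulticastDelegate:
    """Minimal stand-in for a multicast delegate: many listeners, one broadcast."""

    def __init__(self):
        self._listeners = []

    def add(self, fn):
        self._listeners.append(fn)

    def remove(self, fn):
        self._listeners.remove(fn)

    def broadcast(self, *args):
        # Iterate over a copy so listeners can unsubscribe during a broadcast.
        for fn in list(self._listeners):
            fn(*args)


class TetrisManager:
    """Owns the board state; knows nothing about enemies or the arena."""

    def __init__(self):
        self.on_lines_cleared = MulticastDelegate()
        self.total_lines = 0

    def report_clear(self, count):
        self.total_lines += count
        self.on_lines_cleared.broadcast(count, self.total_lines)


class EnemySpawner:
    """Listens for line clears; knows nothing else about the board."""

    def __init__(self, base_interval=5.0):
        self.base_interval = base_interval
        self.interval = base_interval  # seconds between spawns

    def handle_lines_cleared(self, count, total_lines):
        # Hypothetical curve: spawns get 10% faster every 10 lines, floored at 1 s.
        level = total_lines // 10
        self.interval = max(1.0, self.base_interval * (0.9 ** level))


manager = TetrisManager()
spawner = EnemySpawner()
manager.on_lines_cleared.add(spawner.handle_lines_cleared)
manager.report_clear(4)
```

Because the spawner only subscribes to an event, new reactions (ammo refills, HUD effects, audio) can be added without touching the Tetris simulation.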

Picture This!

A game project that uses interactive murals to inspire creative and educational moments in primary care waiting rooms.

Key Features

- Implemented principles of inclusive design, ensuring an engaging experience for both children and their parents.

- Developed a robust admin panel that exposes key game properties through a UI, enabling the care team to rapidly prototype and test therapeutic scenarios without developer intervention.

- Implemented a custom analytics system that logs gameplay events, allowing for data-driven analysis of player behavior, feature engagement, and progression.

Project Overview

Picture This! is a game in the style of Where's Waldo: players are asked questions about the mural on a phone or tablet and must find the answer in either a large in-room mural or a digital version of it.

For example, the player may be asked to "Find the dog eating ice cream." If they find it, they touch the dog and are met with a congratulations screen. If they miss, they are prompted to try again or shown where the dog was.

The framework for the base game was simple enough: build a question prompt and pair it with an answer box enclosing the correct place to touch. It was the additional requirements that added a fun challenge.

- The game had to collect research-quality metrics.

This game was funded in part by a research grant, so it had to collect a wide variety of data points and organize that information in a HIPAA-compliant way. The research team dictated the data collection requirements, and the system was built with the expectation that additional data points could be added at any time.

Collected data could be exported as Excel or JSON files.

- The game had to run in multiple environments and situations.

We did not know exactly where Picture This! would be deployed, so we built the game with operational flexibility in mind. It had several operational modes: a wall-sized mural with a tablet kiosk, a mural paired with a parent's smartphone, a purely digital version, and a mural-only version where staff entered the recorded results afterward.

The game supported multiple murals and languages as well.

- The game had to be customizable by the research team.

A core requirement was that the research team could modify the game flow without a developer's help. They needed to set up different game profiles, each containing the mural image, the questions asked, and their corresponding answers, and they needed the ability to run A/B tests between profiles.
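One common way to A/B test between game profiles is to assign each session to a profile deterministically, so reloading the app never switches a session to a different arm of the test. The Python sketch below hashes an anonymous session ID to pick a profile. It is an assumed approach for illustration, not necessarily how Picture This! assigned profiles, and `assign_profile` is a hypothetical name.

```python
import hashlib


def assign_profile(session_id, profiles):
    """Deterministically map an anonymous session ID to one game profile.

    Hashing (instead of random.choice) means the same session always lands
    in the same profile, even across page reloads.
    """
    if not profiles:
        raise ValueError("need at least one profile")
    digest = hashlib.sha256(session_id.encode("utf-8")).digest()
    # Use the first 8 bytes of the hash as an integer and bucket it.
    bucket = int.from_bytes(digest[:8], "big") % len(profiles)
    return profiles[bucket]


print(assign_profile("session-123", ["mural-a", "mural-b"]))
```

A stable assignment like this also makes the exported data easier to analyze, since every event from a session can be attributed to exactly one profile.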

Development had to adapt to the COVID-19 pandemic, but overall the project was a great success:

- Article: CHOP pediatric center adds interactive murals that foster creativity

- Article: The Doctor Will See You, But First: Picture This!

- Podcast: Primary Care Perspectives: Episode 109 - Picture This: Incorporating Visual Art into Practice

Technical Highlights

- Architected a dynamic, data-driven game engine using React and Redux to manage complex application state, decoupling mural assets, question sets, and touch interactions. This allowed non-technical researchers to define and A/B test new therapeutic scenarios via a custom admin UI without requiring code deployments.

- Engineered a scalable, HIPAA-compliant data pipeline with an extensible schema to capture granular, research-quality metrics. The system was containerized with Docker and automated through Bitbucket Pipelines for CI/CD, ensuring secure, reliable data handling and export for academic analysis.

- Developed a flexible multi-modal deployment system supporting distinct operational environments (kiosk, personal device, staff-led) from a single codebase.

- Implemented a context-aware touch detection system using normalized coordinate mapping, managed centrally by Redux to handle state across different screen sizes and resolutions. This allowed a single mural asset to be accurately interacted with on everything from a tablet to a wall-sized display.
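Normalized coordinate mapping means storing each answer box as fractions of the mural's width and height, then converting every touch into the same 0-to-1 space before testing it, so one set of answer data works on any screen. The Python sketch below assumes the mural fills the entire view (no letterboxing or panning); `AnswerBox` and the helper functions are hypothetical names for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AnswerBox:
    """Answer region in normalized mural coordinates (0.0 to 1.0 on each axis)."""
    left: float
    top: float
    right: float
    bottom: float

    def contains(self, u, v):
        return self.left <= u <= self.right and self.top <= v <= self.bottom


def to_normalized(x_px, y_px, view_width_px, view_height_px):
    """Convert a touch in screen pixels to normalized mural coordinates.

    Assumes the mural fills the whole view; letterboxing or panning would
    need an extra offset and scale here.
    """
    return x_px / view_width_px, y_px / view_height_px


def is_correct_touch(box, x_px, y_px, view_width_px, view_height_px):
    u, v = to_normalized(x_px, y_px, view_width_px, view_height_px)
    return box.contains(u, v)


dog = AnswerBox(left=0.40, top=0.55, right=0.50, bottom=0.70)
# The same relative spot on a 1024x768 tablet and a 3840x2880 wall display:
print(is_correct_touch(dog, 460, 480, 1024, 768))     # True
print(is_correct_touch(dog, 1725, 1800, 3840, 2880))  # True
```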

Wonka's World of Candy

The factory is always growing and evolving!

Key Features

- Innovative Match-3 Gameplay: Experience hundreds of new levels with unique objectives, from collecting chocolate to defeating the Oompa Loompas.

- Wonka's World: Explore iconic locations from the beloved franchise, including the Chocolate Room and Inventing Room, each with its own visual style and challenges.

- Special Candies & Boosters: Unleash a variety of powerful candies and Wonka-themed boosters to solve challenging puzzles and achieve high scores.

Project Overview

Wonka's World of Candy is a high-profile mobile match-3 game developed by Zynga, built upon a proprietary engine and supporting a live service for millions of players. Our studio was contracted to augment the core development team, tasked with designing and implementing new content and features to drive player engagement through regular updates.

As a Gameplay Programmer, I was a key contributor to the live-ops content pipeline, focusing on the dynamic "Wonka Factory" overworld and its associated event systems. My primary responsibility was implementing the logic for limited-time events, which involved modifying the behavior of the Oompa Loompa AI and creating unique progression mechanics that altered the overworld's rules. This required deep collaboration with Zynga's engineers to ensure our systems integrated seamlessly with their existing architecture.

A significant and valuable part of this project involved optimizing the game for the Kindle Fire platform, where I gained hands-on experience addressing the unique performance constraints and device fragmentation challenges of a more limited hardware ecosystem.

Additionally, I supported the game board team by developing and tuning special Match-3 mechanics for event-specific boards, ensuring new candies and obstacles functioned reliably within the established framework. Working directly with a major publisher on a title of this scale honed my ability to write robust, maintainable code in a complex, live-service environment and solidified my passion for creating engaging gameplay experiences for a massive audience.

Technical Highlights

- Developed and integrated new level objectives within Zynga's proprietary framework built on Unity, leveraging existing systems to create unique win conditions while maintaining stability and performance.

- Implemented new special candies and board mechanics by extending the game's core match-3 logic, ensuring they interacted correctly with all existing game elements and followed the established design patterns.

- Collaborated directly with Zynga's internal team to integrate new content into the live build, adhering to their technical standards, version control protocols, and deployment pipelines.

- Authored robust C# code for new gameplay features, focusing on readability, scalability, and ease of use for the design team to configure and tune.

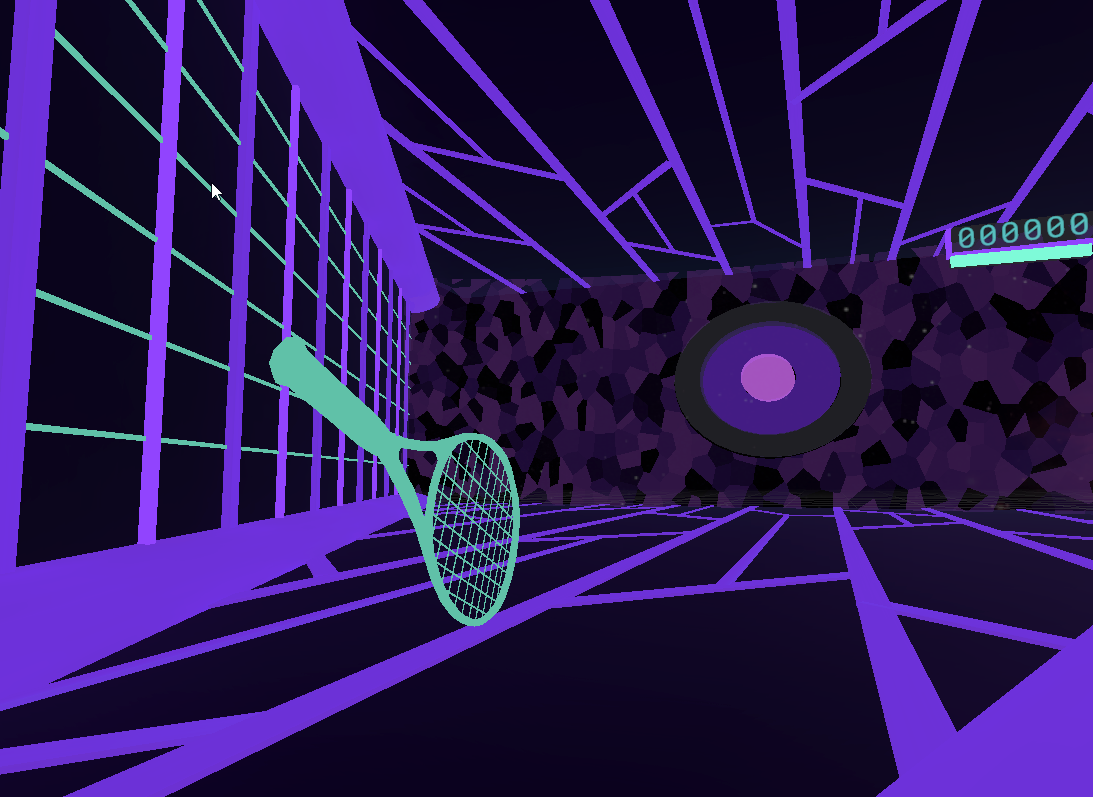

Cyber Hyper Mega Ball

Why play boring racquetball when you can play Cyber Hyper Mega Ball?

- A VR racquetball game made for the Oculus Quest.

Key Features

- VR-First Comfort & Immersion Systems: Engineered a suite of player comfort features, including a dynamic boundary and customizable peripheral vignette, to ensure accessibility and prevent motion discomfort during intense gameplay.

- Dynamic Time Dilation for Pacing & Fun: Implemented a strategic slow-motion system activated on difficult returns, empowering players to execute precise hits and dramatically increasing the game's "power fantasy" and engagement.

- Precision Physics-Based Racket Interaction: Developed a custom physics system for realistic ball trajectory based on racket velocity, angle, and impact point, creating a highly responsive and skill-driven core mechanic.

Project Overview

Cyber Hyper Mega Ball is my passion project and first VR game, a journey that started during a Global Game Jam and ended with a full release on the Oculus Quest.

It all began with a 48-hour Global Game Jam project that, to my surprise, got a ton of positive feedback at the Portland Indie Game Squad showcase. I'm a sucker for encouragement, so that was all the motivation I needed to spend the next few months turning that rough prototype into a polished game.

So, what is it? Imagine a futuristic racquetball match. You're in a VR room with a glowing toxic ball and a racket. Your job is to smack the ball at a giant dartboard-style target on the wall. The closer to the bullseye you hit, the more points you score.

The twist? It starts with one ball, but soon more start spawning. More balls mean more scoring chances, but also more risk. If any ball gets past you and hits the back wall, you lose a life. Lose three, and it's game over.

To keep this chaos from getting overwhelming, I built in a few clever systems:

- A "Bullet Time" Meter: A bonus builds up as you play, letting you slow down time temporarily. This gives you a breather to line up your shots. The moment you hit a ball, it zooms back to full speed.

- Fun-First, Not Realism: I tweaked the physics to be fun, not accurate. There's no gravity, speeds are tuned to feel just right, and the balls have a slight homing tendency to help less experienced players still feel like champions.

- Controlled Chaos: There's a cap on the total number of balls. Once that limit is hit, the existing balls start speeding up, creating a thrilling endgame that keeps even skilled players on their toes.
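The homing tendency described above can be implemented by steering a ball's direction slightly toward the target each frame while keeping its speed unchanged and applying no gravity. This Python sketch shows one assumed way to do it, not the game's actual Unity code; `apply_homing` and its `strength` parameter are hypothetical.

```python
import math


def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    if length == 0:
        return (0.0, 0.0, 0.0)
    return tuple(c / length for c in v)


def apply_homing(position, velocity, target, strength, dt):
    """Nudge velocity toward the target without changing the ball's speed.

    There is no gravity term: balls fly in zero-g. `strength` is a
    hypothetical tuning value (fraction of the turn applied per second).
    """
    speed = math.sqrt(sum(c * c for c in velocity))
    if speed == 0:
        return velocity
    current_dir = normalize(velocity)
    to_target = normalize(tuple(t - p for t, p in zip(target, position)))
    blend = min(1.0, strength * dt)
    # Linearly blend the two directions, then renormalize.
    mixed = tuple(c + (t - c) * blend for c, t in zip(current_dir, to_target))
    new_dir = normalize(mixed)
    if new_dir == (0.0, 0.0, 0.0):
        return velocity  # directions cancelled out; skip this frame's nudge
    return tuple(c * speed for c in new_dir)
```

Keeping speed constant matters for a "fun-first" feel: the assist bends the ball's path toward the bullseye without making shots feel slower or faster than the player hit them.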

While it's a simple game, it was a fantastic experience to develop, and I had a great group of test users who helped me work through the unique set of challenges that come with creating a VR game.

Technical Highlights

- Architected a custom, fun-first physics system that replaced realistic gravity and forces with tuned parameters for ball speed, a target-homing bias, and a zero-g environment, prioritizing consistent and satisfying gameplay over pure simulation.

- Implemented a dynamic time-warp system controlled by a player-earned bonus meter, allowing for strategic slow-motion to manage on-screen chaos without pausing the game state or breaking player immersion.

- Developed a scalable object pool and ball manager to handle the increasing number of active balls, enforcing a performance-friendly cap and introducing a dynamic speed-scaling mechanic to maintain a high skill ceiling.

- Built the entire experience for the Oculus Quest using Unity's XR Interaction Toolkit, optimizing performance and refining the racket interaction for a comfortable, high-fidelity standalone VR experience.
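The object pool and ball cap can be sketched as a manager that pre-allocates a fixed number of balls, reuses them, and turns any spawn past the cap into a speed increase for the balls already in play. This is a hypothetical Python illustration of that design, not the shipped Unity code; the class names and the `speedup_per_spawn` tuning value are invented for the example.

```python
class Ball:
    def __init__(self):
        self.active = False
        self.speed = 0.0


class BallManager:
    """Pre-allocates balls and reuses them instead of creating or destroying them."""

    def __init__(self, max_balls, base_speed, speedup_per_spawn):
        self._pool = [Ball() for _ in range(max_balls)]
        self.base_speed = base_speed
        self.speedup = speedup_per_spawn  # hypothetical tuning value
        self.bonus_speed = 0.0  # accumulated endgame speed-up

    def active_balls(self):
        return [b for b in self._pool if b.active]

    def request_spawn(self):
        """Activate a pooled ball, or speed everything up if the cap is reached."""
        for ball in self._pool:
            if not ball.active:
                ball.active = True
                ball.speed = self.base_speed + self.bonus_speed
                return ball
        # Cap reached: no new ball; every ball in play gets faster instead.
        self.bonus_speed += self.speedup
        for ball in self._pool:
            ball.speed += self.speedup
        return None

    def release(self, ball):
        # Called when a ball leaves play; it returns to the pool for reuse.
        ball.active = False
```

Pre-allocating avoids creating and destroying objects mid-match, a common source of frame hitches, and the cap doubles as the trigger for the endgame speed-up.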

E.T. Mixology

My favorite Game Jam project

Key Features

- Alien Language Deciphering: Engage in a unique puzzle loop of observing alien patrons and deducing the meaning of their symbolic language through experimentation.

- Complex Attribute-Based Logic: Each customer is defined by four distinct attributes (Color, Movement, Symbol, Location) that combine to create a vast array of possible drink orders.

- Rapid Iteration Gameplay: The intuitive "guess-and-check" mechanics allow for quick hypothesis testing and learning, creating a satisfying "aha!" moment with each solved order.

Project Overview

E.T. Mixology is a puzzle game created during the 2018 Global Game Jam, where the core challenge is deciphering an alien language to become the galaxy's best bartender. As the Lead Programmer on a four-person team, I was responsible for architecting and building the core gameplay systems in Unity over the 72-hour development period. The project's success and the technical design decisions I made were directly credited with helping me secure my first professional role as a Software Engineer at Bad Rabbit.

Players interact with a series of alien patrons, each defined by a unique combination of four attributes: Color, Movement, Symbol, and Location. Through observation and trial-and-error, the player must deduce the meaning behind these symbols to mix the correct cocktail. For instance, a "green triangle" might require a triangular olive, while a "blue squiggle that shakes" translates to adding blue fluid and shaking the drink. This project was a crash course in building an intuitive, systemic puzzle loop under extreme time constraints.
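The attribute-to-ingredient mapping can be sketched as one lookup table per attribute that together produce the expected drink. In the Unity version these relationships lived in Scriptable Objects; the Python dictionaries below stand in for that data. Apart from the blue fluid, shake, and triangular olive examples above, every specific mapping here is hypothetical, including the assumption that Location selects a glass.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Patron:
    color: str
    movement: str
    symbol: str
    location: str


# Each table stands in for a data asset; None means "adds nothing".
COLOR_TO_FLUID = {"blue": "blue fluid", "green": "green fluid"}
MOVEMENT_TO_ACTION = {"shakes": "shake", "still": None}
SYMBOL_TO_GARNISH = {"triangle": "triangular olive", "squiggle": None}
# Hypothetical: the text does not say what Location controls; a glass type is assumed.
LOCATION_TO_GLASS = {"bar": "tumbler", "booth": "coupe"}


def expected_order(p):
    """Combine each attribute's component into the drink this patron wants."""
    steps = [LOCATION_TO_GLASS[p.location], COLOR_TO_FLUID[p.color]]
    for extra in (SYMBOL_TO_GARNISH[p.symbol], MOVEMENT_TO_ACTION[p.movement]):
        if extra is not None:
            steps.append(extra)
    return steps


def check_drink(p, drink):
    # Order-insensitive: the player only needs the right set of components.
    return sorted(drink) == sorted(expected_order(p))


shaker = Patron(color="blue", movement="shakes", symbol="squiggle", location="bar")
print(expected_order(shaker))  # ['tumbler', 'blue fluid', 'shake']
```

Because new patrons are just new combinations of table entries, adding content means editing data rather than code, which suits a jam schedule.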

Technical Highlights

- Architected a data-driven alien order system using ScriptableObjects in Unity, allowing for the flexible and rapid creation of new patrons and recipe combinations without hard-coding logic.

- Developed a scalable symbol-attribute mapping system to define the relationships between alien characteristics and cocktail components, forming the logical backbone of the puzzle gameplay.

- Led a 4-person team under a tight 72-hour deadline, managing source control with Git and ensuring clean, documented code for seamless collaboration between the programming and art disciplines.